(Updated: Aug 2020)

Whether you are in a large company running marketing campaigns, or in digital analytics, or a data scientist at a tech startup, you are probably on board with the importance of analytical decision-making. Go to any related conference, blog, meetup, or industry Slack and you will hear many of the following terms: Optimization, AB & Multivariate Testing, Behavioral Targeting, Attribution, Machine Learning, Predictive Analytics, LTV … the list just keeps growing. There are so many terms, techniques, and next big things that it is no surprise that things start to get a little confusing.

If you have taken a look at the Conductrics API, or our admin tools (if you haven’t please contact us for more information), you may have noticed that we use the term ‘agent’ rather than ‘Test’ or ‘optimization’ to describe our main learning method.

Why do we at Conductrics use Agents? Because amazingly, Optimization, AB & Multivariate Testing, Behavioral Targeting, Attribution, Predictive Analytics, Machine Learning, etc. … can all be recast as components of a simple, yet powerful framework borrowed from the field of Artificial Intelligence, the intelligent agent.

Of course we can’t take credit for intelligent agents. I first came across the IA approach in Russell and Norvig’s excellent AI text Artificial Intelligence: A Modern Approach – it’s an awesome book, and I recommend anyone who wants to learn more to go get a copy or check out their online AI course.

I’m in Marketing, why should I care about any of this?

Well, personally, I have found that by thinking about analytics problems as intelligent agents, I am able to instantly see how the concepts listed above are related and how to apply them most effectively, either individually or in concert. Intelligent Agents are a great way to organize your analytics tool box, letting you grab the right tool at the right time. Additionally, since the conceptual focus of an agent is to figure out what action to take, the approach is oriented toward goals and actions rather than data collection and reporting.

The Intelligent Agent

So what is an intelligent agent? You can think of an agent as being an autonomous entity, like a robot, that takes actions in an environment in order to achieve some sort of goal. If that sounds simple, it is, but don’t let that fool you into thinking that it is not very powerful.

Example: Roomba

A simple agent example is the Roomba – the vacuum robot. The Roomba’s environment is the room/floor it is trying to clean. It wants to clean the floor as quickly as possible. Since it doesn’t come with an internal map of your room, it needs to use sensors to observe bits of information about the room that it can use to build an internal model of the room. To do this it takes some time at first to learn the outline of the room in order to figure out the most efficient way to clean.

The Roomba learning the best path to clean a room is similar, at least conceptually, to your marketing application trying to find the best approach to convert your visitors on your site’s or app’s goals.

The Basics

Let’s take a look at the basic components of the intelligent agent and its environment, and walk through the major elements.

First off, we have both the agent, on the left, and its environment, on the right hand side. You can think of the environment as where the agent ‘lives’ and goes about its business of trying to achieve its goals. The Roomba lives in your room. Your web app lives in the environment that is made up of your users.

What are Goals and Rewards?

The goals are what the agent wants to achieve, what it is striving to do. When the agent achieves a goal, it gets a reward based on the value of the goal. So if the goal of the agent is to increase online sales, the reward might be the value of the sale.

Given that the agent has a set of goals and allowable actions, the agent’s task is to learn what actions to take given its observations of the environment – so what it ‘sees’, ‘hears’, ‘feels’, etc. Assuming the agent is trying to maximize the total value of its goals over time, then it needs to select the action that maximizes this value, based on its past experiences after taking that action.

So how does the agent determine how to act based on what it observes? The agent accomplishes this by taking the following basic steps:

- Observe the environment to determine its current situation. You can think of this as data collection.

- Refer to its internal model of the environment to select an action from the collection of allowable actions.

- Take an action.

- Observe the environment again to determine its new situation. So, another round of data collection.

- Evaluate the ‘goodness’ of its new situation – did it reach a goal? If not, does it seem closer to or further away from reaching a goal than before it took the last action?

- Update its internal model on how taking that action ‘moved’ it in the environment and whether it helped it reach, or get closer to, a goal. This is the learning step.

By repeating this process, the agent’s internal model of how the environment responds to each action continuously improves and better approximates each action’s actual impact.
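The steps above can be sketched as a small loop. This is only an illustrative toy, not how Conductrics is implemented: the `SimpleAgent` class, the `toy_environment` function, the conversion rates, and the fixed 10% exploration rate are all assumptions made up for the example.

```python
import random
from collections import defaultdict


class SimpleAgent:
    """Toy agent: keeps a running average reward per (situation, action)."""

    def __init__(self, actions, explore_rate=0.1, seed=0):
        self.actions = list(actions)
        self.explore_rate = explore_rate   # assumed, fixed exploration rate
        self.rng = random.Random(seed)
        self.value = defaultdict(float)    # internal model: estimated reward
        self.count = defaultdict(int)      # times each pair has been tried

    def select_action(self, situation):
        # Consult the internal model, but occasionally try something else.
        if self.rng.random() < self.explore_rate:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.value[(situation, a)])

    def update(self, situation, action, reward):
        # The learning step: an incremental running average of the reward.
        key = (situation, action)
        self.count[key] += 1
        self.value[key] += (reward - self.value[key]) / self.count[key]


def toy_environment(action, rng):
    """Hypothetical environment in which 'B' converts more often than 'A'."""
    convert_rate = {"A": 0.05, "B": 0.15}[action]
    return 1.0 if rng.random() < convert_rate else 0.0


agent = SimpleAgent(actions=["A", "B"])
env_rng = random.Random(1)
for _ in range(5000):
    situation = "visitor"                      # observe the environment
    action = agent.select_action(situation)    # consult the model, then act
    reward = toy_environment(action, env_rng)  # observe again and evaluate
    agent.update(situation, action, reward)    # update the internal model

print(dict(agent.value))
```

The printed estimates are the agent’s current ‘beliefs’ about the value of each action; with more passes through the loop they should move toward the environment’s true conversion rates.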

This is exactly how Conductrics works behind the scenes to go about optimizing your applications. The Conductrics agent ‘observes’ its world by receiving API calls from your application – so information about location, referrer etc.

In a similar vein, the Conductrics agent takes actions by returning information back to your application, with instructions about what the application should do with the user.

When the user converts on one of the goals, a separate call is made back to the Conductrics server with the goal information, which is then used to update the internal models.

Over time, Conductrics learns, and applies, the best course of action for each visitor to your application.

Learning and Control

The intelligent agent has two interrelated tasks – to learn and to control. In fact, all online testing and behavioral targeting tools can be thought of as being composed of these two primary components, a learning/analysis component and a controller component. The controller makes decisions about what actions the application is to take. The learner’s task is to make predictions on how the environment will respond to the controller’s actions. Ah, but we have a bit of a problem. The agent’s main objective is to get as much reward as possible. However, in order to do that, it needs to figure out what action to take in each environmental situation.

Explore vs. Exploit

The intelligent agent will need to try out each of the possible actions in order to determine the optimal solution. Of course, to achieve the greatest overall success, poorly performing actions should be taken as infrequently as possible. This leads to an inherent tension between the desire to select the high-value action and the need to try seemingly sub-optimal but under-explored actions. This tension is often referred to as the “Explore vs. Exploit” trade-off in the bandit and Reinforcement Learning literature and is a part of optimizing in uncertain environments. Really, what this is getting at is that there are Opportunity Costs to Learn (OCL).

To provide some context for the explore/exploit trade-off, consider the standard A/B approach to optimization. The application runs the A/B test by first randomly exposing different users to the A/B treatments. This initial period, where the application is gathering information about each treatment, can be thought of as the exploration period. Then, after some statistical threshold has been reached, one treatment is declared the ‘winner’ and is thus selected to be part of the default user experience. This is the exploit period, since the application is exploiting its learnings in order to provide the optimal user experience.
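Here is a rough sketch of that two-phase pattern. To keep it short, a fixed number of exploration users stands in for the statistical threshold a real test would use, and the function name, treatment names, and conversion rates are all hypothetical.

```python
import random


def explore_then_exploit(convert_rates, explore_users, total_users, seed=0):
    """Randomize for the first `explore_users`, then always show the winner."""
    rng = random.Random(seed)
    treatments = list(convert_rates)
    shown = {t: 0 for t in treatments}
    converted = {t: 0 for t in treatments}
    winner = None

    for user in range(total_users):
        if user < explore_users:
            # Explore: uniform random assignment, as in a standard A/B test.
            treatment = rng.choice(treatments)
        else:
            if winner is None:
                # Simplification: pick the highest observed conversion rate.
                # A real test would apply a significance test here.
                winner = max(
                    treatments,
                    key=lambda t: converted[t] / max(shown[t], 1),
                )
            # Exploit: everyone now gets the declared winner.
            treatment = winner

        shown[treatment] += 1
        if rng.random() < convert_rates[treatment]:
            converted[treatment] += 1

    return winner, shown, converted


# Hypothetical true conversion rates, unknown to the application.
winner, shown, converted = explore_then_exploit(
    {"A": 0.05, "B": 0.07}, explore_users=2000, total_users=10000
)
print(winner, shown, converted)
```

Every user who saw the weaker treatment during the exploration phase is part of the opportunity cost to learn, and if the exploration phase is too short, the wrong treatment can be declared the winner and exploited for the rest of the run.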

AB/Multivariate Testing Agent

In the case of AB Testing both the learning and controller components are fairly unsophisticated. The way the controller selects the actions is to just pick one of them at random. If you are doing a standard AB style test then the controller picks from a uniform distribution – all actions have an equal chance of selection.

The learning component is essentially just a report or set of reports, perhaps calculating significance tests. Often there is no direct communication from the learning module to the controller. In order to take advantage of the learning, a human analyst is required to review the reporting, and then based on results, make adjustments to the controller’s action selection policy. Usually this means that the analyst will select one of the test options as the ‘winner’, and remove the rest from consideration. So AB Testing can be thought of as a method for the agent to determine the value of each action.

I just quickly want to point out, however, that the AB Testing with analyst approach is not the only way to go about determining and selecting best actions. There are alternative approaches that try to balance, in real time, the learning (exploration) and optimization (exploitation). They are often referred to as adaptive learning and control. For adaptive solutions, the controller is made ‘aware’ of the learner and is able to autonomously make decisions based on the most recent ‘beliefs’ about the effectiveness of each action. This approach requires that the information stored in the learner is made accessible to the controller component. You can read about how this is done in some detail in our post on Thompson Sampling, and our overview of Multi-armed Bandits.
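To make the idea of a controller that reads the learner’s beliefs a bit more concrete, here is a minimal textbook-style sketch of Thompson Sampling for yes/no conversions. It is not the Conductrics implementation: the `ThompsonController` class is hypothetical, and the uniform Beta(1, 1) starting belief for each action is an assumption.

```python
import random


class ThompsonController:
    """Beta-Bernoulli Thompson Sampling over a fixed set of actions."""

    def __init__(self, actions, seed=0):
        self.rng = random.Random(seed)
        # The learner's beliefs: conversions and non-conversions per action.
        self.successes = {a: 0 for a in actions}
        self.failures = {a: 0 for a in actions}

    def select_action(self):
        # Draw one plausible conversion rate per action from its current
        # Beta belief (assumed Beta(1, 1) prior), then act on the best draw.
        draws = {
            a: self.rng.betavariate(self.successes[a] + 1, self.failures[a] + 1)
            for a in self.successes
        }
        return max(draws, key=draws.get)

    def update(self, action, converted):
        # The learning step: record the outcome for the action taken.
        if converted:
            self.successes[action] += 1
        else:
            self.failures[action] += 1


controller = ThompsonController(["A", "B"])
action = controller.select_action()
controller.update(action, converted=True)
```

Because each decision is based on a random draw from the current beliefs, actions with little data still get tried from time to time, while actions that look clearly worse are selected less and less often. No analyst has to step in to change the selection policy.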

Targeting Agents

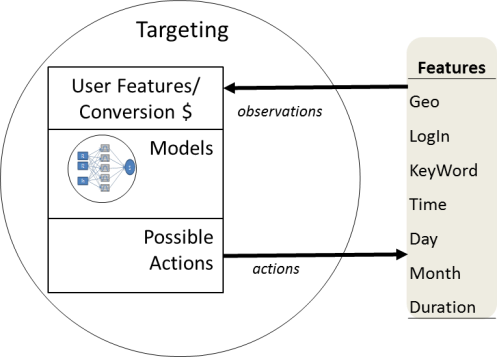

Maybe you call it targeting, or segmentation, or personalization, but whatever you call it, the idea is different folks get different experiences. In the intelligent agent framework, targeting is really just about specifying the environment that the agent lives in.

Let’s revisit the AB Testing agent, but we add some user segments to it.

You can see the segmented agent differs in that its environment is a bit more complex. Unlike before, where the AB Test agent just needed to be aware of the conversions (reward) after taking an action, it now also needs to ‘see’ which segment the user belongs to.
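In code, the only change from the plain AB Test agent is that the learner keeps its tallies per (segment, action) pair rather than per action. The sketch below is illustrative only; the `SegmentedReport` class, the segment names, and the recorded outcomes are all made up.

```python
from collections import defaultdict


class SegmentedReport:
    """Conversion tallies keyed by (segment, action)."""

    def __init__(self):
        self.shown = defaultdict(int)
        self.converted = defaultdict(int)

    def record(self, segment, action, converted):
        self.shown[(segment, action)] += 1
        self.converted[(segment, action)] += int(converted)

    def conversion_rate(self, segment, action):
        n = self.shown.get((segment, action), 0)
        return self.converted.get((segment, action), 0) / n if n else 0.0

    def best_action(self, segment, actions):
        return max(actions, key=lambda a: self.conversion_rate(segment, a))


report = SegmentedReport()
# Hypothetical observations: (segment, action, converted?)
observations = [
    ("new", "A", True), ("new", "A", False), ("new", "B", False),
    ("returning", "A", False), ("returning", "B", True), ("returning", "B", True),
]
for segment, action, converted in observations:
    report.record(segment, action, converted)

print(report.best_action("new", ["A", "B"]))        # A
print(report.best_action("returning", ["A", "B"]))  # B
```

With this tiny made-up data set the best action differs by segment, which is exactly the situation where targeting pays off.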

Targeting or Testing? It is the Wrong Question

Notice that with the addition of segment-based targeting, we still need to have some method of determining what actions to take. So targeting isn’t an alternative to testing, or vice versa. Targeting is just when you use a more complex environment for your optimization problem. You still need to evaluate and select the action. In simpler targeting environments, it might make sense to use the AB Testing approach as we did above. Regardless, Targeting and Testing shouldn’t be confused as competing approaches – they are really just different parts of a more general problem.

Ah, well you may say, ‘hey that is just AB Testing with Segments, not behavioral targeting. Real targeting uses fancy math and machine learning – it is a totally different thing.’ Actually, not really. Let’s look at another targeting agent, but this time instead of a few user segments, we have a bunch of user features.

Now the environment is made up of many individual bits of information, such that there could be millions or even billions of possible unique combinations. Hmm, it is going to get a little tricky to try to run your standard AB style test here. Too many possible micro segments to just enumerate them all in a big table, and even if you did, you wouldn’t have enough data to learn since most of the combinations would have 1 user at most.
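A quick back-of-the-envelope count shows how fast this blows up. The feature names, the number of distinct values for each, and the traffic figure below are all invented for illustration.

```python
from math import prod

# Hypothetical features and how many distinct values each can take.
feature_cardinalities = {
    "country": 50,
    "device": 3,
    "referrer": 20,
    "hour_of_day": 24,
    "day_of_week": 7,
    "returning_visitor": 2,
    "landing_page": 30,
}

combinations = prod(feature_cardinalities.values())
monthly_users = 1_000_000  # assumed traffic

print(combinations)                  # 30240000
print(monthly_users / combinations)  # well under one user per combination
```

Seven modest features already give about 30 million micro segments, far more than the number of users available to fill them.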

That isn’t too much of a problem actually, because rather than setting up a big table, we can use approximating functions to represent the map from observed features to the value of each action.

Machine Learning: Mapping Observed User Features to Actions

Not only can the use of predictive models reduce the size of the internal representation, but it also allows us to generalize to observations that the agent has not come across before. Also, we are free to pick whatever functions, models etc. we want here. How we go about selecting and calculating these relationships is often in the domain of Predictive Analytics and Data Science.
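As one possible illustration, the sketch below replaces the big table with a small logistic model per action, trained one observation at a time. The choice of logistic regression, the `ActionValueModel` class, the two features, and the training data are all assumptions for the example; any other predictive model could play the same role.

```python
import math


class ActionValueModel:
    """One small logistic model per action: features -> P(conversion)."""

    def __init__(self, actions, n_features, learning_rate=0.5):
        self.learning_rate = learning_rate
        # One weight per feature plus a trailing bias term, per action.
        self.weights = {a: [0.0] * (n_features + 1) for a in actions}

    def predict(self, action, features):
        w = self.weights[action]
        z = w[-1] + sum(wi * xi for wi, xi in zip(w, features))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, action, features, converted):
        # One stochastic gradient step on the log-loss for this observation.
        error = float(converted) - self.predict(action, features)
        w = self.weights[action]
        for i, xi in enumerate(features):
            w[i] += self.learning_rate * error * xi
        w[-1] += self.learning_rate * error

    def best_action(self, features):
        return max(self.weights, key=lambda a: self.predict(a, features))


# Features: [is_mobile, is_returning]. Made-up pattern: 'A' converts mobile
# users, 'B' converts desktop users. Returning users are never seen in training.
model = ActionValueModel(actions=["A", "B"], n_features=2)
for _ in range(200):
    model.update("A", [1, 0], True)
    model.update("A", [0, 0], False)
    model.update("B", [1, 0], False)
    model.update("B", [0, 0], True)

# A returning mobile user is a combination the model has never observed.
print(model.best_action([1, 1]))  # A
```

The model stores three numbers per action instead of one table cell per feature combination, and it still produces a prediction for the unseen returning mobile user by reusing what it learned about mobile users in general.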

Ah, but we still have to figure out how to select the best action. The exploration/exploitation tradeoff hasn’t gone away. If we didn’t care about the opportunity costs to learn, then we could try all the actions out randomly for a time, train our models and then switch off the learning and apply the models. Of course there is a cost to learn, which is why Google, Yahoo! and other Ad targeting platforms, spend quite a bit of time and resources trying to come up with sophisticated ways to learn as fast as possible (For more see our posts on Bandits and Thompson Sampling).

Summary

Many online learning problems can be reformulated as an intelligent agent problem.

Optimization – is the discovery of the best action for each observation of the environment in the least amount of time. In other words, optimization should take into account the opportunity cost to learn.

Testing – either AB or Multivariate, is just one way, of many, to learn the value of taking each action in a given environment.

Targeting – is really just specifying the agent’s environment. Efficient targeting provides the agent with just enough detail so that the agent can select the best actions for each situation it finds itself in.

Predictive Analytics – covers how to specify which internal models to use and how to best establish the mapping between the agent’s observations, and the actions. This allows the agent to predict what the outcome will be for each action it can take.

We didn’t cover attribution and LTV in this post, but our post on Going from AB Testing to AI extends the agent to handle attribution as a sequential decision process.

What is neat is that even if you don’t use Conductrics, intelligent agents are a great framework to arrange your thoughts when solving your online optimization problems.

If you want to learn more, please sign up for a Conductrics account today at Conductrics.