The seemingly simple ideas underpinning AB Testing can be confusing. Rather than getting into the weeds of the definitions of p-values and significance, AB Testing may be easier to understand if we reframe it as a simple ruling-out procedure.

Ruling Out What?

There are two things we are trying to rule out when we run AB Tests:

- Confounding

- Sampling Variability/Error

Confounding is the Problem; Random Selection is a Solution

What is Confounding?

Confounding is when unobserved factors that can affect our results are mixed in with the treatment that we wish to test. A classic example of potential confounding is the effect of education on future earnings. While people with more years of education tend to have higher earnings, a question Economists like to ask is whether extra education drives earnings, or whether natural ability, which is unobserved, determines both how many years of education people get and how much they earn. Here is a picture of this:

We want to be able to test if there is a direct causal relationship between education and earnings, but what this simple DAG (Directed Acyclic Graph) shows is that education and earnings might be jointly determined by ability, which we can’t directly observe. So we won’t know whether it is education that is driving earnings, or whether earnings and education are both just outcomes of ability.

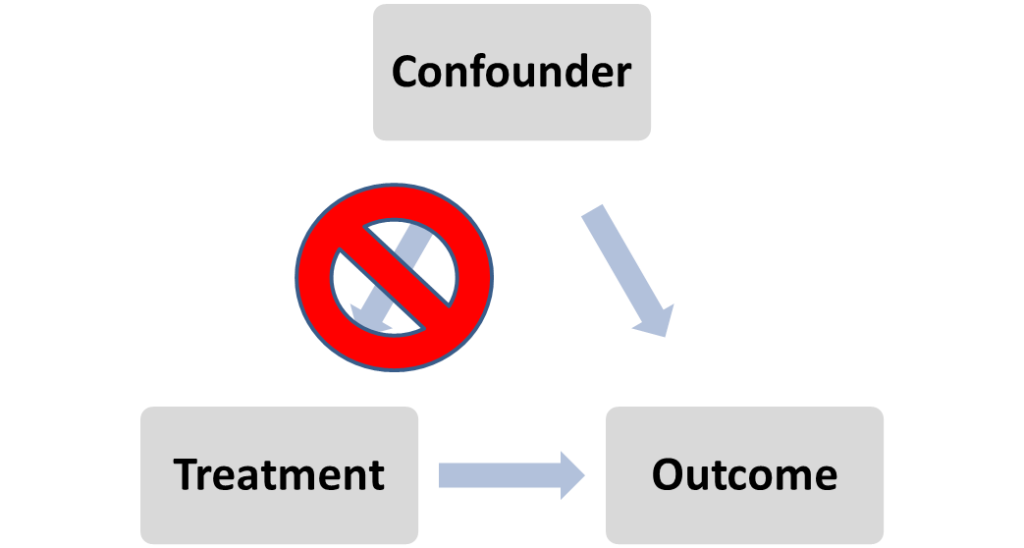

The general picture of confounding looks like this:

What we want is a way to break the connection between the potential confounder and the treatment.

Randomization to the Rescue

Amazingly, if we are able to randomize which subjects are assigned to each treatment, we can break, or block, the effect of unobserved confounders and make causal statements about the effect of the treatment on the outcome of interest.

Why? Since assignment is based on a random draw, neither the user nor any potential confounder is mixed in with the treatment assignment anymore. You might say that the confounder no longer gets to choose its treatment. For example, if we were able to randomly assign people to education, then high and low ability students would each be equally likely to land in the low or the high education group, and their effect on earnings would balance out, on average, leaving just the direct effect of education on earnings. Random assignment lets us rule out potential confounders, allowing us to focus just on the causal relationship between treatment and outcomes*.
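To make the idea concrete, here is a small simulation sketch in Python. The data-generating process, the effect sizes `TRUE_EFFECT` and `ABILITY_EFFECT`, and the helper `education_gap` are all hypothetical, chosen only for illustration and not drawn from any real study. When ability influences who gets more education, the simple difference in mean earnings mixes the effect of ability in with the effect of education; when education is assigned by coin flip, the same difference lands close to the true effect.

```python
import random
import statistics

# Hypothetical effect sizes, chosen only for illustration
TRUE_EFFECT = 2.0     # causal effect of "high education" on earnings
ABILITY_EFFECT = 3.0  # effect of unobserved ability on earnings

def education_gap(n, randomized, seed=0):
    """Mean earnings of the high-education group minus the low-education group."""
    rng = random.Random(seed)
    high, low = [], []
    for _ in range(n):
        ability = rng.gauss(0, 1)  # the unobserved confounder
        if randomized:
            high_edu = rng.random() < 0.5  # coin flip: ability plays no role
        else:
            high_edu = ability + rng.gauss(0, 1) > 0  # abler people get more education
        earnings = TRUE_EFFECT * high_edu + ABILITY_EFFECT * ability + rng.gauss(0, 1)
        (high if high_edu else low).append(earnings)
    return statistics.mean(high) - statistics.mean(low)

obs_diff = education_gap(100_000, randomized=False)
rct_diff = education_gap(100_000, randomized=True)
print(f"observational gap: {obs_diff:.2f}")  # mixes in the effect of ability
print(f"randomized gap:    {rct_diff:.2f}")  # close to TRUE_EFFECT
```

With these settings the observational gap should come out well above 2 (around 5.4 in expectation), while the randomized gap should land near 2. Nothing about ability changed between the two runs; only who got to choose the treatment did.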

So are we done? Not quite. We still have to deal with uncertainty that is introduced whenever we try to learn from sample observations.

Sampling Variation and Uncertainty

Analytics is about making statements about the larger world via induction: the process of observing samples from the environment, then applying the tools of statistical inference to draw general conclusions. One aspect of this that often goes underappreciated is that there is always some inherent uncertainty due to sample variation. Since we never observe the world in its entirety, but only finite, random samples, our view of it will vary based on the particular sample we use. This is the reason for the tools of statistical inference: to account for this variation when we try to draw conclusions.

A central idea behind induction/statistical inference is that we are only able to make statements about the truth within some bound, or range, and that bound only holds in probability.

For example, suppose the true value is represented by the little blue dot. But this value is hidden from us.

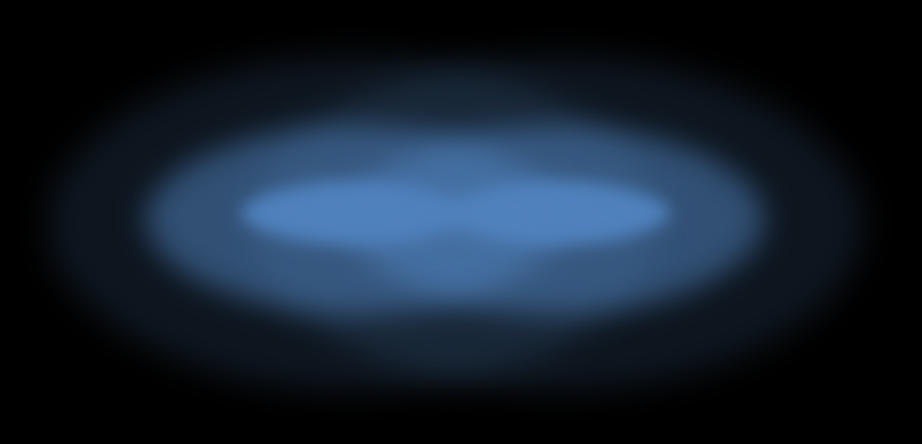

Instead, what we are able to learn is something more like a smear.

The smear tells us that the true value of the thing we are interested in will lie somewhere between x and x’ with some probability P (say 0.95). So there is some probability, 1 - P (perhaps 0.05), that our smear won’t cover the true value.
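One way to see what the probability P means is to simulate it. The sketch below assumes a hypothetical true conversion rate `TRUE_RATE` (the hidden blue dot), repeatedly draws samples of visitors, builds a 95% smear from each using the common normal-approximation (Wald) interval, and counts how often the smear covers the truth. The constants and the `smear` helper are illustrative assumptions.

```python
import random
from statistics import NormalDist

TRUE_RATE = 0.10   # hypothetical true conversion rate, hidden in practice
N = 2_000          # visitors per sample
CONFIDENCE = 0.95  # the probability P that a smear covers the truth
Z = NormalDist().inv_cdf(0.5 + CONFIDENCE / 2)  # about 1.96 for 95%

def smear(sample):
    """Normal-approximation (Wald) interval for a conversion rate."""
    p_hat = sum(sample) / len(sample)
    half_width = Z * (p_hat * (1 - p_hat) / len(sample)) ** 0.5
    return p_hat - half_width, p_hat + half_width

rng = random.Random(42)
trials = 1_000
covered = 0
for _ in range(trials):
    sample = [rng.random() < TRUE_RATE for _ in range(N)]  # True = converted
    low, high = smear(sample)
    covered += low <= TRUE_RATE <= high  # a bool counts as 0 or 1
coverage = covered / trials
print(f"share of smears covering the true value: {coverage:.3f}")
```

The share should come out close to 0.95: most smears cover the true value, but roughly 1 in 20 misses it, and from any single sample we cannot tell which kind we got.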

This means that there are actually two interrelated sources of uncertainty:

1) the width, or precision, of the smear (more formally called a bound)

2) the probability that the true value will lie within the smear rather than outside its upper and lower bounds.

Given a fixed sample (and a given estimator), we can reduce the width of the smear (make it tighter, more precise) only by reducing the probability that the truth will lie within it; and vice versa, we can increase the probability that the truth will lie in the smear only by increasing its width (making it looser, less precise). The confidence interval is one example of this more general concept: we say the treatment effect is likely within some interval (bound) with a given probability (say 0.95). We will always be limited in this way. Yes, we can decrease the width and increase the probability that it holds by increasing our sample size, but always with diminishing returns (the width shrinks on the order of O(1/sqrt(n)), so quadrupling the sample only halves it).
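The trade-off can be seen numerically. The sketch below assumes a normal-approximation interval for a hypothetical conversion rate of 10% and prints the width of the smear at three confidence levels for three sample sizes. The function `interval_width` and its default `p=0.10` are illustrative assumptions.

```python
from statistics import NormalDist

def interval_width(confidence, n, p=0.10):
    """Full width of a normal-approximation interval for a conversion rate p with n visitors."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return 2 * z * (p * (1 - p) / n) ** 0.5

for n in (1_000, 4_000, 16_000):
    row = ", ".join(f"{c:.0%}: {interval_width(c, n):.4f}" for c in (0.80, 0.95, 0.99))
    print(f"n={n:>6}  {row}")
```

Reading across a row, asking for more confidence makes the smear wider; reading down a column, each fourfold increase in visitors only halves the width, which is the O(1/sqrt(n)) diminishing return.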

AB Tests and P-values To Rule Out Sampling Variations

Assuming we have collected the samples appropriately and certain assumptions hold, once randomization has removed potential confounders there are just two potential sources of variation between our A and B interventions:

1) the inherent sampling variation that is always part of sample-based inference, which we discussed earlier; and

2) a causal effect – the effect on the world that we hypothesize exists when doing B vs A.

AB tests are a simple, formal process to rule out, in probability, sampling variability. Through the process of elimination, if we rule out sampling variation as the main source of the observed effect (with some probability), then we might conclude the observed difference is due to a causal effect. The p-value (the probability of seeing a difference as large as the one observed, or larger, just due to random sampling if there were no causal effect) is compared against the probability of error we are willing to tolerate in order to rule out sampling variation as a likely source of the observed difference.
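As an illustration of the ruling-out step for conversion rates, here is a sketch of the standard pooled two-proportion z-test using only the Python standard library. It is one common choice among several tests that could be used here. The counts are hypothetical, the helper `two_proportion_p_value` is an illustrative name, and `ALPHA` stands for the probability of error we are willing to tolerate; 0.05 is a common convention, not a requirement.

```python
from statistics import NormalDist

def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference in conversion rates (pooled z-test)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # rate if A and B were the same
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

ALPHA = 0.05  # tolerated probability of wrongly ruling out sampling variation
# Hypothetical counts, not from any real test
p = two_proportion_p_value(conv_a=200, n_a=2_000, conv_b=250, n_b=2_000)
if p < ALPHA:
    print(f"p = {p:.4f}: rule out sampling variation; the difference is plausibly causal")
else:
    print(f"p = {p:.4f}: cannot rule out sampling variation")
```

With these made-up counts (10% vs 12.5% conversion on 2,000 visitors each), the p-value comes out around 0.012, below 0.05, so we would rule out sampling variation as the likely explanation. Identical counts in both arms give a p-value of 1.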

For example, in the first case we might not be willing to rule out sampling variability, since our smears overlap with one another – indicating that the true value of each might well be covered by either smear.

However, in this second case, where our smears are mostly distinct from one another, we have little evidence that sampling variability alone could produce such a difference between our results, and hence we might conclude the difference is due to a causal effect.
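The two cases can be mimicked with hypothetical numbers. The sketch below uses the same 10% vs 12.5% conversion rates twice: once with 200 visitors per arm and once with 20,000. It builds a 95% normal-approximation smear for each arm and checks whether the two overlap. The counts and the helpers `smear` and `overlaps` are illustrative assumptions.

```python
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.975)  # about 1.96

def smear(conversions, visitors):
    """95% normal-approximation interval for one arm's conversion rate."""
    p = conversions / visitors
    half_width = Z95 * (p * (1 - p) / visitors) ** 0.5
    return p - half_width, p + half_width

def overlaps(a, b):
    """True if intervals a = (low, high) and b = (low, high) share any point."""
    return a[0] <= b[1] and b[0] <= a[1]

# Hypothetical results: 10% vs 12.5% conversion in both tests
small_a, small_b = smear(20, 200), smear(25, 200)              # first case
large_a, large_b = smear(2_000, 20_000), smear(2_500, 20_000)  # second case
print("small test, smears overlap:", overlaps(small_a, small_b))
print("large test, smears overlap:", overlaps(large_a, large_b))
```

The small test's smears overlap, while the large test's are distinct. Overlap is only a rough visual guide: two 95% intervals can overlap slightly even when a formal test on the difference rules out sampling variation at the 0.05 level, so the test on the difference itself is the more precise check.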

So we look to rule out in order to conclude.**

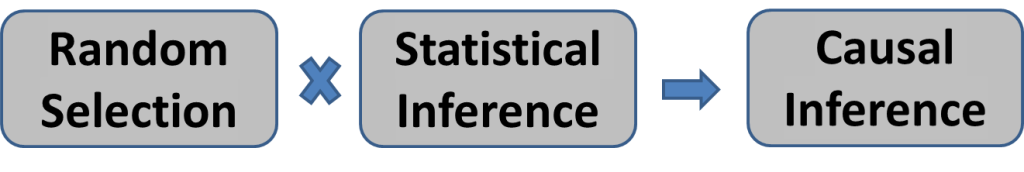

To summarize, AB Tests, or Randomized Controlled Trials (RCTs), randomize treatment assignment to generate random samples from each treatment. This blocks confounding, so that we can safely use the tools of statistical inference to make causal statements.

* RCTs are not the only way to deal with confounding. When studying the effect of education on earnings, and unable to run RCTs, Economists used the method of instrumental variables to try to deal with confounding in observational data.

**Technically, ‘reject the null’. If ‘null’ trips you up, think of Tennis: it’s like zero. We ask: ‘After accounting for the difference we would likely see due to sampling, is there evidence to reject the idea that the difference we observe (e.g. the difference in conversion rate between B and A) is just due to sampling variation?’

***If you want to learn about other ways of dealing with confounding beyond RCTs, a good introduction is Causal Inference: The Mixtape by Scott Cunningham.